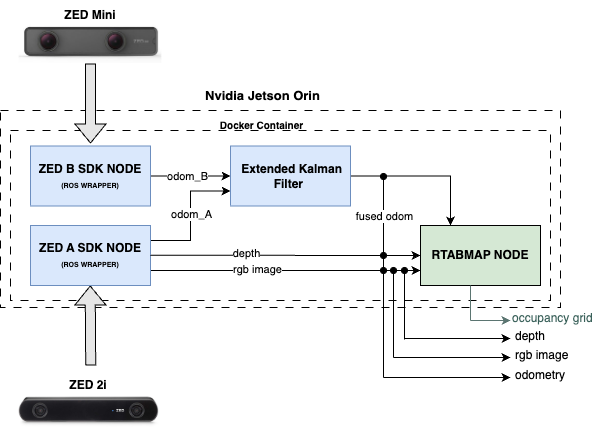

This was a university project for the Human Centered Robotics course, built by a team of 10 students. I led the perception team. We used two stereo vision cameras to produce point cloud data, object detection bounding boxes, and odometry data for the robot's navigation subsystems.

Here is the project abstract:

"Wheel-E is a smart and autonomous wheelchair aimed at children with severe disabilities. The system includes three unique features: a social navigation system that applies proxemics and ‘social awareness’ metrics to the autonomous path planning; a sentiment analysis model which classifies the user state and allows the wheelchair to adjust the velocity accordingly; a laser pointer that functions as a directional indicator of the wheelchair’s movements. Wheel-E is expected to improve the wheelchair user’s comfort navigating social environments, whilst enhancing the level of trust in the system of external people interacting with it. This report will start by analysing the current research trends when developing smart wheelchairs, then describe the design, development and testing of our system, and, finally, evaluate the research hypotheses investigated."